The researchers found that a Convolutional Neural Network (CNN) could detect fish sounds that neither human analysts nor traditional spectrogram analysis were able to pick out. Although the CNN was trained on hydrophone recordings made in British Columbia, it remained accurate in the new environment at PortMiami, despite significant background noise from boats. The software developed for this study is open-source and available to other researchers.

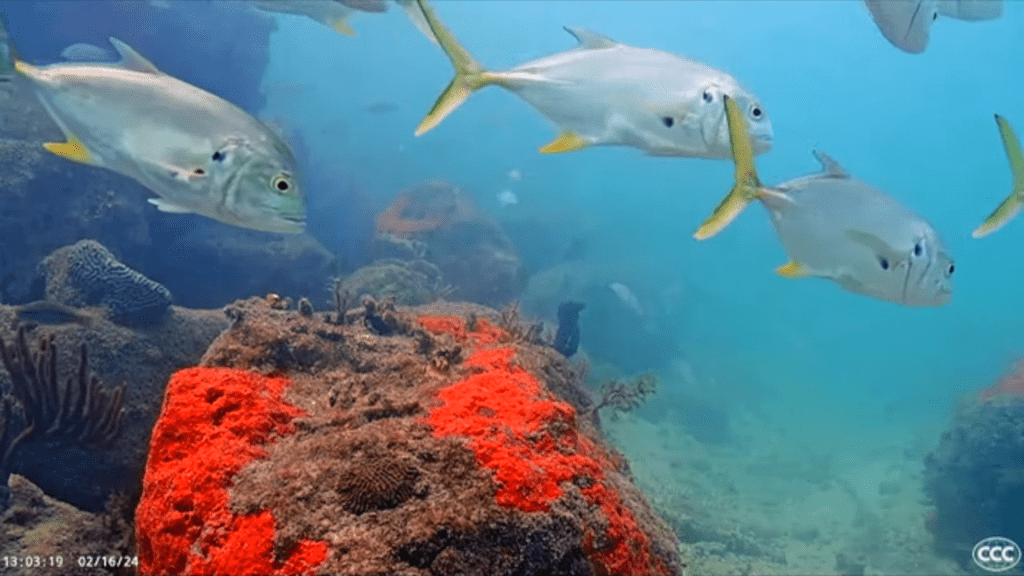

Stay tuned in the coming months as we prepare to connect a hydrophone to the Coral City Camera and provide an audio channel to the YouTube livestream. If possible, we aim to incorporate real-time analysis of the underwater sounds to help monitor and track fish activity.

0 Comments